Graph Analytics with Spark GraphFrames

GraphX has never been a huge success and was tied to RDDs. GraphFrames is based on DataFrames and seems to be taking off. Scalability gives mixed results: if your graphs are below 100K edges you will have no issues, but dealing with billions of links is not so straightforward, at least at this moment. By the time you read this things might be in version 1+ and soaring.

The examples below use PySpark, but everything works just as easily with Scala. There are various articles explaining how to load GraphFrames in the context of Spark. It all amounts to something like

import pyspark

from pyspark import SparkContext, SparkConf, SQLContext

from pyspark.sql import SparkSession

conf = SparkConf().setMaster("local")

sc = SparkContext(conf=conf)

spark = SparkSession.builder.appName('MyApp').getOrCreate()

sc.addPyFile("~/somewhere/graphframes-0.5.0-spark2.1-s_2.11.jar")

but with Spark and the ever-changing Apache landscape, be prepared for some fiddling. Although I don't want to advertise any particular service, I found that using Databricks is the easiest way to get going.

Import the namespace

from graphframes import *

and create some vertices and edges via DataFrames. For example:

vertices = spark.createDataFrame([

("a", "Alice", 34),

("b", "Bob", 36),

("c", "Charlie", 30),

("d", "David", 29),

("e", "Esther", 32),

("f", "Fanny", 36),

("g", "Gabby", 60)], ["id", "name", "age"])

edges = spark.createDataFrame([

("a", "b", "friend"),

("b", "c", "follow"),

("c", "b", "follow"),

("f", "c", "follow"),

("e", "f", "follow"),

("e", "d", "friend"),

("d", "a", "friend"),

("a", "e", "friend")

], ["src", "dst", "relationship"])

which can be combined into a graph:

g = GraphFrame(vertices, edges)

Once you have a graph you can get all sorts of things out of it:

- g.edges

- g.degrees, g.inDegrees and g.outDegrees

- counting filtered edges with g.edges.filter("relationship = 'follow'").count()
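To make concrete what these properties report, here is a minimal plain-Python sketch (no Spark required) that recomputes the degrees and the filtered edge count for the example edge list. It only illustrates the definitions and is not how GraphFrames computes them; the variable names are chosen for this illustration.

```python
from collections import Counter

# Same edge list as in the example above: (src, dst, relationship).
edges = [
    ("a", "b", "friend"), ("b", "c", "follow"), ("c", "b", "follow"),
    ("f", "c", "follow"), ("e", "f", "follow"), ("e", "d", "friend"),
    ("d", "a", "friend"), ("a", "e", "friend"),
]

# Out-degree counts edges leaving a vertex, in-degree counts edges arriving.
out_degrees = Counter(src for src, _, _ in edges)
in_degrees = Counter(dst for _, dst, _ in edges)
# The total degree is simply the sum of both.
degrees = out_degrees + in_degrees

# Equivalent of filtering on relationship = 'follow' and counting.
follow_count = sum(1 for _, _, rel in edges if rel == "follow")

for v in sorted(degrees):
    print(v, "in:", in_degrees[v], "out:", out_degrees[v], "total:", degrees[v])
print("follow edges:", follow_count)
```

Note that Gabby ("g") has no edges at all, so she does not show up in any of the degree tables in this sketch.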

Microsoft's Cosmos DB uses Apache TinkerPop's Gremlin, triple stores use SPARQL, and Neo4j invented its own dialect (Cypher). GraphFrames has motifs, which look like this

motifs = g.find("(a)-[e]->(b); (b)-[e2]->(a)")

motifs.show()

or something which looks like an SQL where-clause:

filtered = motifs.filter("b.age > 30 or a.age > 30")

filtered.show()
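The motif "(a)-[e]->(b); (b)-[e2]->(a)" asks for pairs of vertices connected in both directions. A rough plain-Python equivalent on the example data, meant only to show what the pattern matches (the dictionaries and lists here are hypothetical stand-ins for the DataFrames), could look like this:

```python
# Ages per vertex id and directed edges (src, dst) from the example above.
ages = {"a": 34, "b": 36, "c": 30, "d": 29, "e": 32, "f": 36, "g": 60}
edges = [
    ("a", "b"), ("b", "c"), ("c", "b"), ("f", "c"),
    ("e", "f"), ("e", "d"), ("d", "a"), ("a", "e"),
]
edge_set = set(edges)

# "(a)-[e]->(b); (b)-[e2]->(a)": an edge a->b whose reverse b->a also exists.
motifs = sorted((a, b) for (a, b) in edge_set if (b, a) in edge_set)

# "b.age > 30 or a.age > 30": keep pairs where at least one side is over 30.
filtered = [(a, b) for (a, b) in motifs if ages[b] > 30 or ages[a] > 30]

print(motifs)
print(filtered)
```

Each mutual pair appears twice, once per direction, because the pattern is matched for every assignment of a and b.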

Creating subgraphs goes via a subset of edges. For example, extracting the 'follow' edges that point from a younger user to an older one:

paths = (g.find("(a)-[e]->(b)")

.filter("e.relationship = 'follow'")

.filter("a.age < b.age"))

# `paths` also contains the vertex information; keep only the edge columns:

e2 = paths.select("e.src", "e.dst", "e.relationship")

g2 = GraphFrame(g.vertices, e2)
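As a sanity check on what the subgraph should contain, the same edge filter can be sketched in plain Python. This is only an illustration of the two filter conditions on the example data, with hypothetical variable names:

```python
ages = {"a": 34, "b": 36, "c": 30, "d": 29, "e": 32, "f": 36, "g": 60}
edges = [
    ("a", "b", "friend"), ("b", "c", "follow"), ("c", "b", "follow"),
    ("f", "c", "follow"), ("e", "f", "follow"), ("e", "d", "friend"),
    ("d", "a", "friend"), ("a", "e", "friend"),
]

# Keep 'follow' edges whose source is younger than the destination.
sub_edges = [
    (src, dst, rel)
    for src, dst, rel in edges
    if rel == "follow" and ages[src] < ages[dst]
]

# The subgraph keeps every vertex and only the selected edges.
sub_vertices = sorted(ages)

print(sub_edges)
```

Only two edges survive: Charlie following Bob and Esther following Fanny.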

Graph algorithms

GraphFrames comes with a number of standard graph algorithms built in:

- Breadth-first search (BFS)

- Connected components

- Strongly connected components

- Label Propagation Algorithm (LPA)

- PageRank

- Shortest paths

- Triangle count

For instance, a breadth-first traversal starting from Esther for users of age less than 32:

paths = g.bfs("name = 'Esther'", "age < 32")

paths.show()
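For intuition, a breadth-first search that stops at the first level where a target is found can be sketched in plain Python as follows. The function name and its arguments are made up for this illustration and are not part of the GraphFrames API.

```python
ages = {"a": 34, "b": 36, "c": 30, "d": 29, "e": 32, "f": 36, "g": 60}
edges = [
    ("a", "b"), ("b", "c"), ("c", "b"), ("f", "c"),
    ("e", "f"), ("e", "d"), ("d", "a"), ("a", "e"),
]

# Directed adjacency list: src -> list of dst.
adjacency = {}
for src, dst in edges:
    adjacency.setdefault(src, []).append(dst)


def bfs_shortest_paths(starts, is_target):
    """Return all shortest directed paths from the start vertices to a target."""
    frontier = [[s] for s in starts]
    visited = set(starts)
    while frontier:
        # Stop at the first level where at least one path ends in a target.
        hits = [path for path in frontier if is_target(path[-1])]
        if hits:
            return hits
        next_frontier, newly_seen = [], set()
        for path in frontier:
            for nxt in adjacency.get(path[-1], []):
                if nxt not in visited:
                    newly_seen.add(nxt)
                    next_frontier.append(path + [nxt])
        visited |= newly_seen
        frontier = next_frontier
    return []


# Start from Esther ("e") and look for anyone younger than 32.
found = bfs_shortest_paths(["e"], lambda v: ages[v] < 32)
print(found)
```

Esther is directly connected to David (29), so the search ends after one hop, even though Charlie (30) would be reachable two hops away via Fanny.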

The connected components are just as easy:

result = g.connectedComponents()

result.show()
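What a connected-components run should return for the example graph can be checked with a small union-find sketch in plain Python. This is a textbook approach used for illustration, not the algorithm GraphFrames runs. As far as I know, GraphFrames 0.3.0 and later also expects a checkpoint directory to be set (via sc.setCheckpointDir) before calling connectedComponents, so treat that as something to verify for your version.

```python
vertices = ["a", "b", "c", "d", "e", "f", "g"]
edges = [
    ("a", "b"), ("b", "c"), ("c", "b"), ("f", "c"),
    ("e", "f"), ("e", "d"), ("d", "a"), ("a", "e"),
]

# Every vertex starts as the root of its own component.
parent = {v: v for v in vertices}


def find(v):
    """Return the representative of v's component (with path halving)."""
    while parent[v] != v:
        parent[v] = parent[parent[v]]
        v = parent[v]
    return v


# Edge direction is ignored: an edge merges the components of both ends.
for src, dst in edges:
    parent[find(src)] = find(dst)

components = {}
for v in vertices:
    components.setdefault(find(v), []).append(v)

groups = sorted(components.values())
print(groups)
```

The example graph has two components: everyone except Gabby, and Gabby on her own.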

Something that will be appreciated in many applications (say, marketing) is PageRank:

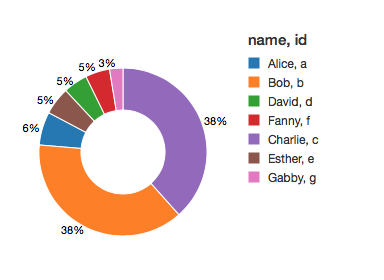

results = g.pageRank(resetProbability=0.15, tol=0.01) print(results.vertices)

giving something like
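To give a feel for the numbers behind such a ranking, here is a minimal textbook PageRank by power iteration in plain Python. It is a sketch under assumptions: scores are normalized to sum to 1 and rank held by vertices without outgoing edges is spread evenly, which may differ from the normalization GraphFrames uses, so compare the ordering rather than the absolute values. The function and parameter names are made up for this illustration.

```python
vertices = ["a", "b", "c", "d", "e", "f", "g"]
edges = [
    ("a", "b"), ("b", "c"), ("c", "b"), ("f", "c"),
    ("e", "f"), ("e", "d"), ("d", "a"), ("a", "e"),
]


def pagerank(vertices, edges, reset_probability=0.15, tol=1e-9, max_iter=200):
    """Textbook PageRank; returns a dict of scores that sum to 1."""
    n = len(vertices)
    out_links = {v: [] for v in vertices}
    for src, dst in edges:
        out_links[src].append(dst)

    ranks = {v: 1.0 / n for v in vertices}
    for _ in range(max_iter):
        # Rank held by vertices without outgoing edges is spread evenly.
        dangling = sum(ranks[v] for v in vertices if not out_links[v])
        base = reset_probability / n + (1 - reset_probability) * dangling / n
        new_ranks = {v: base for v in vertices}
        # Every vertex passes its rank on in equal shares along its out-edges.
        for src, targets in out_links.items():
            for dst in targets:
                new_ranks[dst] += (1 - reset_probability) * ranks[src] / len(targets)
        delta = sum(abs(new_ranks[v] - ranks[v]) for v in vertices)
        ranks = new_ranks
        if delta < tol:
            break
    return ranks


ranks = pagerank(vertices, edges)
ordering = sorted(vertices, key=lambda v: ranks[v], reverse=True)
for v in ordering:
    print(v, round(ranks[v], 3))
```

Bob and Charlie end up on top because they point only at each other and so keep most of the rank that flows into them, while Gabby, who has no edges, sits at the bottom.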

There are many more things you can (should) try out: triangle count, shortest paths (Dijkstra and such). Overall the framework feels 'right' and on the right track, unlike GraphX.
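As a small taste of triangle counting, the following plain-Python sketch counts, for each vertex of the example graph, the triangles it takes part in. It assumes edge direction is ignored, which is my understanding of how GraphFrames treats triangles; the brute-force loop over all triples is only suitable for toy graphs.

```python
from itertools import combinations

vertices = ["a", "b", "c", "d", "e", "f", "g"]
edges = [
    ("a", "b"), ("b", "c"), ("c", "b"), ("f", "c"),
    ("e", "f"), ("e", "d"), ("d", "a"), ("a", "e"),
]

# Undirected neighbor sets; duplicates such as b->c and c->b collapse.
neighbors = {v: set() for v in vertices}
for src, dst in edges:
    if src != dst:
        neighbors[src].add(dst)
        neighbors[dst].add(src)

# Check every triple of vertices for three mutual connections.
triangle_count = {v: 0 for v in vertices}
for u, v, w in combinations(sorted(vertices), 3):
    if v in neighbors[u] and w in neighbors[u] and w in neighbors[v]:
        for member in (u, v, w):
            triangle_count[member] += 1

print(triangle_count)
```

The only triangle in the example is Alice, David and Esther.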

There are not many alternatives if you are looking for terabyte-size graphs. Apache Giraph is an option, and it seems Facebook is using it, which says a lot:

Since its inception, Giraph has continued to evolve, allowing us to handle Facebook-scale production workloads but also making it easier for users to program. In the meantime, a number of other graph processing engines emerged. For instance, the Spark framework, which has gained adoption as a platform for general data processing, offers a graph-oriented programming model and execution engine as well.

Some of the pros and cons are summarized here. Though (large) graphs are everywhere, it's a bit surprising that big-graph frameworks are still so little used. There is some overlap with semantic (triple) stores and solutions like Neo4j, the graph support in SQL Server 2017 and later, and the like, but still. What works best is really a research topic of its own, depending on the data and the (business) aims.